Digital marketing strategies change quickly, and A/B testing is one of the most reliable tools for optimizing campaigns. This data-driven approach lets marketers base decisions on measured behavior rather than guesswork. Understanding A/B testing best practices is key to improving key performance indicators (KPIs) such as conversion rate, click-through rate, and ultimately return on investment (ROI). Whether you’re a seasoned marketer or just starting out, these techniques will help you run more effective online campaigns.

This article covers the essential A/B testing best practices for digital campaigns. We will walk through the process from defining clear testing hypotheses and choosing what to test, to analyzing results and iterating on your strategies. Along the way, we’ll cover defining measurable conversion goals, determining appropriate sample sizes, and interpreting statistical significance correctly.

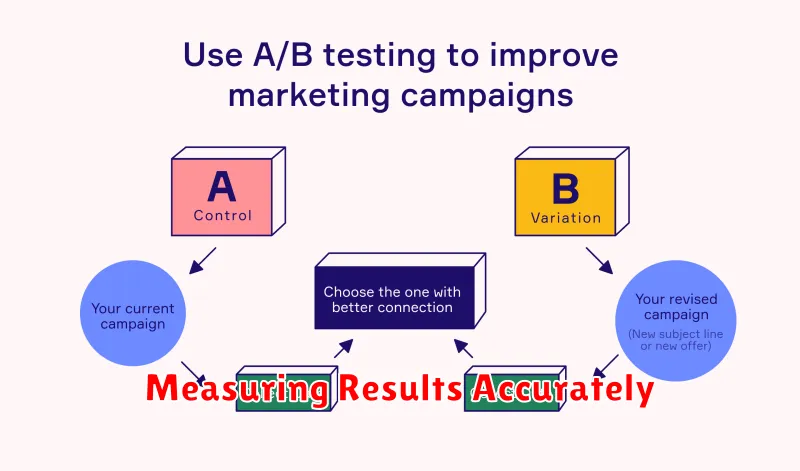

What is A/B Testing in Marketing?

A/B testing, also known as split testing, is a methodical approach to comparing two versions of a marketing asset. These versions differ in a single element, such as a headline, call to action, or image. By presenting each variation to a separate segment of your target audience, you can measure which performs better based on a predetermined metric, such as conversion rate or click-through rate.

The goal of A/B testing is to identify which version resonates more effectively with your audience and ultimately drives better results for your campaigns. It reduces guesswork and supports data-driven decisions when optimizing marketing materials.

Identifying Variables to Test

A crucial step in A/B testing is identifying the right variables to manipulate. Focus on elements directly impacting your campaign goals. For example, if your goal is increased conversions, test variables related to the call to action.

Key variables to consider include headlines, body copy, images, button colors, form fields, and layout. Prioritize changes with the highest potential impact. Start with one variable at a time to isolate its effect on performance.

Use data analytics to identify underperforming areas. For example, if your landing page has a high bounce rate, consider testing different headlines or layouts. A/B testing helps validate hypotheses and improve campaign effectiveness.

Setting Up a Clean Test Environment

A clean test environment is crucial for reliable A/B testing results. It keeps external factors from influencing your data and skewing your findings. Start with a sample audience that accurately reflects your target demographic, and assign users to variations at random so the two groups differ only in the variation they see.

Isolate your test groups as much as possible. Ensure that users in group A are not exposed to variations intended for group B, and vice versa. This prevents cross-contamination of your results and maintains the integrity of your data. Thoroughly QA your testing setup before launching. This includes verifying tracking is working correctly and variations are displaying as intended.
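One common way to keep groups isolated is to assign each user deterministically, so the same visitor always sees the same variation on every visit. The sketch below is an illustration under stated assumptions, not a prescribed setup: the function name `assign_variant`, the experiment label, and the choice of a SHA-256 hash over the user ID are all hypothetical choices made for the example.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Map a user to one variant, always returning the same answer for that user.

    Hashing the experiment name together with the user ID means a user's
    group in one experiment does not determine their group in another.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Use the first 8 hex digits as an integer and spread it over the variants.
    bucket = int(digest[:8], 16) % len(variants)
    return variants[bucket]


# Hypothetical usage: the same user gets the same variant on every call.
print(assign_variant("user-123", "headline-test"))
print(assign_variant("user-123", "headline-test"))
```

Because the assignment depends only on the user ID and the experiment name, it needs no stored state, and it can be re-run during QA to confirm that a given test account lands in the expected group.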

Measuring Results Accurately

Accurate measurement is crucial for deriving meaningful insights from A/B tests. Define your key performance indicators (KPIs) before launching your test. These KPIs should directly align with your campaign goals, whether it’s click-through rate, conversion rate, or average order value.

Determine the sample size you need before launching, so the test has enough statistical power to detect the smallest improvement you care about. Insufficient data can lead to misleading conclusions, as can stopping a test the moment the results look favorable. Use an online calculator or statistical software to determine the appropriate sample size for your test.
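For readers who want to see what such a calculator does, here is a minimal sketch using the standard normal-approximation formula for comparing two conversion rates. The function name, the default of 80% power, and the treatment of the lift as an absolute difference (for example, 5% to 6%) are assumptions made for illustration; a dedicated tool may use a slightly different formula and return a slightly different number.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float,
                            min_detectable_lift: float,
                            alpha: float = 0.05,
                            power: float = 0.80) -> int:
    """Approximate users needed in EACH variant for a two-sided test.

    baseline_rate: current conversion rate, e.g. 0.05 for 5%.
    min_detectable_lift: smallest absolute change worth detecting, e.g. 0.01.
    """
    p1 = baseline_rate
    p2 = baseline_rate + min_detectable_lift
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided threshold
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


# Hypothetical scenario: 5% baseline, want to detect a move to 6%.
print(sample_size_per_variant(0.05, 0.01))
```

Note how quickly the requirement grows as the lift shrinks: halving the detectable lift roughly quadruples the number of users needed per variant.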

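Choose a significance threshold before the test starts. A 95% confidence level, which corresponds to a significance threshold (alpha) of 0.05, is the common choice. A p-value below 0.05 means that, if the two variations truly performed the same, a difference at least as large as the one you observed would appear less than 5% of the time. It does not mean there’s a 95% probability that the difference is real.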
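To make the significance check concrete, the following sketch runs a two-proportion z-test on conversion counts from two variations. It is one reasonable way to compare conversion rates, not the only one; the function name and the example counts are hypothetical, and the test assumes samples large enough for the normal approximation to hold.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate: the best estimate if both variations truly perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("No variation in outcomes; the test is undefined.")
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: 500 of 10,000 converted on A, 600 of 10,000 on B.
z, p = two_proportion_z_test(500, 10_000, 600, 10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
print("significant at alpha = 0.05" if p < 0.05 else "not significant")
```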

Scaling Winning Variants

After a variant is declared a winner in your A/B test, the next step is scaling it. This means implementing the changes from the winning variant across your entire campaign or website. Scale gradually, so that you can monitor performance at each step and limit the risk if the variant behaves differently at a larger scale.

Start by rolling out the winning variant to a larger percentage of your audience. For example, if you tested on 10% of your traffic, increase implementation to 25%, then 50%, and finally 100%. This allows you to continuously track key metrics and ensure the winning variant continues to perform as expected in a broader context.
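A staged rollout like this can be sketched with a percentage gate. In the example below, each user is hashed into one of 100 buckets, and the winning variant is shown to users whose bucket falls below the current rollout percentage. The names `ROLLOUT_STAGES` and `in_rollout` and the feature label are hypothetical, and real feature-flag tools may implement this differently.

```python
import hashlib

# Stages taken from the example in the text: 10% test, then 25%, 50%, 100%.
ROLLOUT_STAGES = (10, 25, 50, 100)


def in_rollout(user_id: str, feature: str, percent: int) -> bool:
    """Return True if this user should see the winning variant at this stage."""
    key = f"{feature}:{user_id}".encode("utf-8")
    # Stable bucket in the range 0..99 for this user and feature.
    bucket = int(hashlib.sha256(key).hexdigest()[:8], 16) % 100
    return bucket < percent


# Hypothetical usage: check one user at each stage of the rollout.
for stage in ROLLOUT_STAGES:
    print(stage, in_rollout("user-123", "new-headline", stage))
```

Because a user's bucket never changes, anyone included at 25% is still included at 50% and 100%, so raising the percentage only adds users and never flips someone back to the old version.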

Careful monitoring is crucial during the scaling process. Observe your key metrics and be prepared to adjust your strategy if necessary. Even though a variant performed well in a smaller test, its performance can change when implemented on a larger scale.